How To Draw Loss

Once training has finished, you can plot the recorded losses. In Keras, `model.fit()` returns a `History` object whose `history` attribute maps each metric name to a list with one value per epoch, so the training loss can be drawn with matplotlib:

```python
import matplotlib.pyplot as plt

# history is the object returned by model.fit(...)
loss_values = history.history['loss']
epochs = range(1, len(loss_values) + 1)

plt.plot(epochs, loss_values, label='training loss')
# 'val_loss' is recorded only when fit() receives validation_data or validation_split
if 'val_loss' in history.history:
    plt.plot(epochs, history.history['val_loss'], label='validation loss')
plt.xlabel('epochs')
plt.ylabel('loss')
plt.legend()
plt.show()
```

The chart shows visually how the model is doing over time. Expect the two curves to differ: the loss of the model will almost always be lower on the training dataset than on the validation dataset.

Loss alone does not tell the whole story. To validate a model we also need a scoring function (see the scikit-learn guide "Metrics and scoring: quantifying the quality of predictions"), for example accuracy for classifiers. The proper way of choosing multiple hyperparameters of an estimator is of course grid search or a similar method that selects the hyperparameters with the best score on one or more validation sets.

How can we view the loss landscape of a larger network? Though we can't get anything like a complete view of the loss surface, we can still get a view, as long as we don't especially care which view we get.

A common forum question is how to draw the loss convergence for training and validation in a simple matplotlib graph. If you write the training loop yourself, you are right to collect the epoch losses in lists such as `trainingepoch_loss` and `validationepoch_loss`; each list can then be passed to `plt.plot()` against the epoch numbers. A related question is how to plot the loss values stored in the `loss_curve_` attribute of scikit-learn's `MLPClassifier`.
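When the per-epoch losses are already in two Python lists (for example `history.history['loss']` and `history.history['val_loss']`, or the `trainingepoch_loss` and `validationepoch_loss` lists mentioned above), a small helper can summarise the gap between the curves before drawing them. This is only an illustrative sketch, not code from any of the quoted sources: the names `summarize_losses` and `plot_losses` are hypothetical, and matplotlib is imported inside the plotting function so the summary works without it.

```python
from typing import List, Sequence, Tuple


def summarize_losses(
    train: Sequence[float], val: Sequence[float]
) -> Tuple[List[int], List[float], int]:
    """Return 1-based epoch numbers, the per-epoch gap (val - train) and
    the epoch with the lowest validation loss.

    Hypothetical helper: both sequences must hold one value per epoch.
    """
    if len(train) != len(val) or not train:
        raise ValueError("need one training and one validation loss per epoch")
    epochs = list(range(1, len(train) + 1))
    gap = [v - t for t, v in zip(train, val)]
    # Index of the smallest validation loss; ties resolve to the earliest epoch.
    best = min(range(len(val)), key=lambda i: val[i]) + 1
    return epochs, gap, best


def plot_losses(train: Sequence[float], val: Sequence[float]) -> None:
    """Draw both curves and mark the best validation epoch (needs matplotlib)."""
    import matplotlib.pyplot as plt  # lazy import: summarize_losses has no dependency

    epochs, _, best = summarize_losses(train, val)
    plt.plot(epochs, train, label="training loss")
    plt.plot(epochs, val, label="validation loss")
    plt.axvline(best, linestyle="--", color="gray",
                label=f"lowest validation loss (epoch {best})")
    plt.xlabel("epochs")
    plt.ylabel("loss")
    plt.legend()
    plt.show()


if __name__ == "__main__":
    # Made-up values purely to show the call; not results from a real model.
    train_loss = [0.90, 0.60, 0.45, 0.38, 0.33]
    val_loss = [0.95, 0.70, 0.58, 0.56, 0.59]
    print(summarize_losses(train_loss, val_loss)[2])
```

A widening gap after the best epoch, as in the toy values above, is the usual visual sign that the model has started to overfit.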
Learning curves are also useful for classical models; the scikit-learn documentation, for instance, gives an interpretation of the learning curves obtained for a naive Bayes classifier and an SVM classifier.

For deep learning, a typical tutorial shows how to plot the training and validation loss curves for a Transformer model and how to modify the training code to include validation and test splits. The same approach works for a regression problem such as the concrete dataset.

For scikit-learn, the question was how to plot the losses after fitting a model via `MLPClassifier` with code like this:

```python
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

# x, y: your features and labels
tr_x, ts_x, tr_y, ts_y = train_test_split(x, y, train_size=.8)
# learning_rate only takes effect when solver='sgd'; further arguments omitted
model = MLPClassifier(hidden_layer_sizes=(32, 32), activation='relu',
                      solver='adam', learning_rate='adaptive')
```

After `model.fit(tr_x, tr_y)`, the loss at each training iteration is stored in `model.loss_curve_` (for the `'sgd'` and `'adam'` solvers), and that list can be passed straight to `plt.plot()`.

Whichever framework you use, expect some gap between the training and validation loss learning curves. In a hand-written PyTorch loop you collect the losses yourself: create an empty list such as `loss_vals = []` before `for epoch in range(num_epochs):`, and reset `running_loss = 0.0` before looping over the mini-batches with `for i, data in enumerate(trainloader, 0):`.
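The `running_loss = 0.0` / `enumerate(trainloader, 0)` pattern accumulates mini-batch losses and reports their average every few batches, which gives a smoother curve than plotting every batch. The sketch below reproduces only that bookkeeping in plain Python so it runs without a framework; `batch_losses` stands in for the `loss.item()` values a real loop would produce, and the function name `running_average` and its `every` parameter are hypothetical.

```python
from typing import Iterable, List, Tuple


def running_average(batch_losses: Iterable[float], every: int) -> List[Tuple[int, float]]:
    """Average the loss over each block of `every` mini-batches.

    Returns (batch_number, mean_loss) pairs, where batch_number is the 1-based
    index of the last batch in the block. A trailing partial block is dropped,
    mirroring the usual `if i % every == every - 1` reporting pattern.
    """
    if every < 1:
        raise ValueError("every must be a positive integer")
    points: List[Tuple[int, float]] = []
    running_loss = 0.0
    for i, loss in enumerate(batch_losses, 0):
        running_loss += loss
        if i % every == every - 1:  # last batch of a reporting block
            points.append((i + 1, running_loss / every))
            running_loss = 0.0  # reset, as in the training-loop pattern
    return points


if __name__ == "__main__":
    # Hypothetical per-batch losses, only to show the output format.
    print(running_average([4.0, 2.0, 3.0, 1.0, 5.0], every=2))
```

The returned batch numbers and means can be passed directly to `plt.plot`. Reporting a block average rather than the last batch's loss keeps a single noisy mini-batch from dominating the curve.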
Keras's `History` callback stores the accuracy and loss for every epoch in a dictionary (`history.history`), so training accuracy, training loss, validation accuracy and validation loss can all be plotted from the same object once training is done. A frequent request is exactly this plot for TensorFlow 1.x in Google Colab; the approach is the same there, although older versions may name the key `'acc'` rather than `'accuracy'`.

If you would rather track the loss curve during training with a hand-written PyTorch loop, record each mini-batch loss and average it at the end of each epoch:

```python
# model, criterion, optimizer, trainloader and num_epochs are assumed to be defined earlier
loss_vals = []  # mean training loss of each epoch
for epoch in range(num_epochs):
    epoch_loss = []
    for i, (images, labels) in enumerate(trainloader):
        optimizer.zero_grad()
        loss = criterion(model(images), labels)
        loss.backward()
        optimizer.step()
        epoch_loss.append(loss.item())  # .item() turns the 0-dim tensor into a float
    loss_vals.append(sum(epoch_loss) / len(epoch_loss))
```

`loss_vals` can then be plotted against `range(1, num_epochs + 1)`.

The quantity being plotted is the objective of an optimization problem: we define a function as the objective function and search for a solution that maximizes or minimizes it. In training, the objective is the loss, and the optimizer searches for the weights that minimize it.

For the loss landscape of a larger network, we can take a random 2D slice out of the loss surface and look at the contours of that slice, hoping that it is more or less representative.
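The random 2D slice described above can be sketched without any deep-learning framework. The example below assumes a toy quadratic loss in place of a real network, picks two random Gaussian directions, and evaluates the loss on a grid around a chosen parameter vector; the resulting grid is what a contour plot would draw. The names `toy_loss`, `random_direction` and `loss_slice` are hypothetical, and published loss-landscape studies add refinements (such as rescaling the directions) that this sketch leaves out.

```python
import random
from typing import Callable, List, Sequence


def toy_loss(theta: Sequence[float]) -> float:
    """Stand-in loss (assumption): an elongated bowl with its minimum at the origin."""
    return sum((i + 1) * x * x for i, x in enumerate(theta))


def random_direction(dim: int, rng: random.Random) -> List[float]:
    """Gaussian random direction with the same dimension as the parameters."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def loss_slice(
    loss: Callable[[Sequence[float]], float],
    center: Sequence[float],
    steps: Sequence[float],
    seed: int = 0,
) -> List[List[float]]:
    """Evaluate loss(center + a*d1 + b*d2) for every (a, b) in steps x steps.

    Rows follow b and columns follow a, so the grid can be passed to
    matplotlib as contour(steps, steps, grid).
    """
    rng = random.Random(seed)  # fixed seed: the same slice on every call
    d1 = random_direction(len(center), rng)
    d2 = random_direction(len(center), rng)
    grid = []
    for b in steps:
        row = []
        for a in steps:
            point = [c + a * u + b * v for c, u, v in zip(center, d1, d2)]
            row.append(loss(point))
        grid.append(row)
    return grid


if __name__ == "__main__":
    steps = [i / 2 for i in range(-4, 5)]  # -2.0 ... 2.0
    z = loss_slice(toy_loss, center=[0.0] * 10, steps=steps)
    # With matplotlib installed: plt.contour(steps, steps, z); plt.show()
    print(len(z), len(z[0]))
```

Because only one pair of directions is sampled, a different seed gives a different view of the same surface, which is exactly the "we don't especially care what view we get" trade-off.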

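Because the `History` callback keeps every metric in one dictionary keyed by name (`'loss'`, `'accuracy'`, and `'val_'`-prefixed versions when validation data is supplied), training and validation curves can be paired automatically and drawn side by side. This is a sketch under that assumption about the key names; the exact keys depend on the Keras version and the metrics passed to `compile()`, and the helper names `pair_metrics` and `plot_history` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def pair_metrics(history: Dict[str, Sequence[float]]) -> List[Tuple[str, str]]:
    """Pair each training metric with its 'val_' counterpart, if one exists.

    Returns (train_key, val_key) tuples sorted by key; val_key is '' when
    no validation values were recorded for that metric.
    """
    pairs = []
    for key in sorted(history):
        if key.startswith("val_"):
            continue  # validation keys are attached to their training key
        val_key = "val_" + key
        pairs.append((key, val_key if val_key in history else ""))
    return pairs


def plot_history(history: Dict[str, Sequence[float]]) -> None:
    """One subplot per metric, e.g. accuracy and loss (needs matplotlib)."""
    import matplotlib.pyplot as plt  # lazy import: pair_metrics works without it

    pairs = pair_metrics(history)
    if not pairs:
        return
    fig, axes = plt.subplots(1, len(pairs), figsize=(5 * len(pairs), 4), squeeze=False)
    for ax, (train_key, val_key) in zip(axes[0], pairs):
        epochs = range(1, len(history[train_key]) + 1)
        ax.plot(epochs, history[train_key], label="training " + train_key)
        if val_key:
            ax.plot(epochs, history[val_key], label="validation " + train_key)
        ax.set_xlabel("epochs")
        ax.set_ylabel(train_key)
        ax.legend()
    plt.tight_layout()
    plt.show()
```

After training, `plot_history(history.history)` would draw one panel per metric. Any extra entries a callback adds to the dictionary simply get their own panel without a validation curve.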
Pin on Personal Emotional Healing

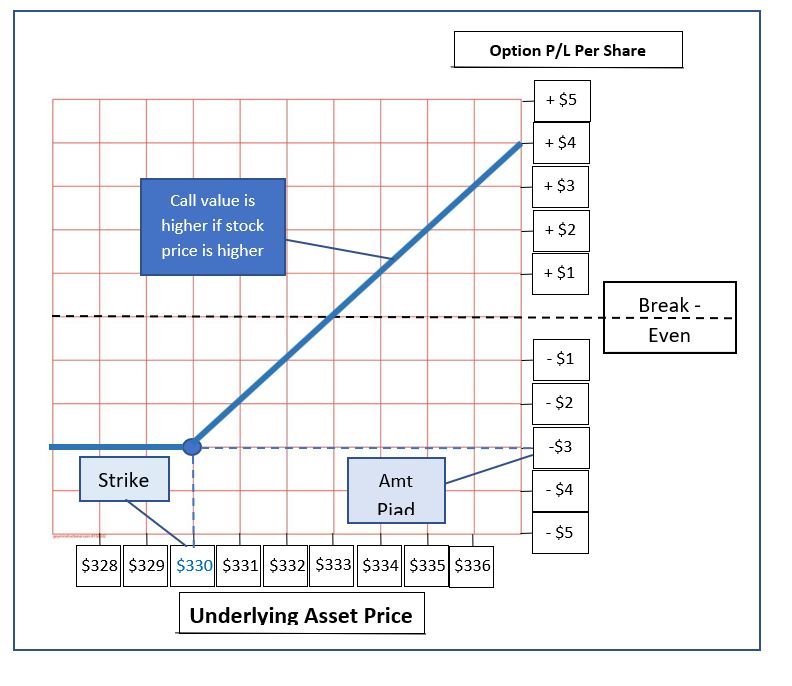

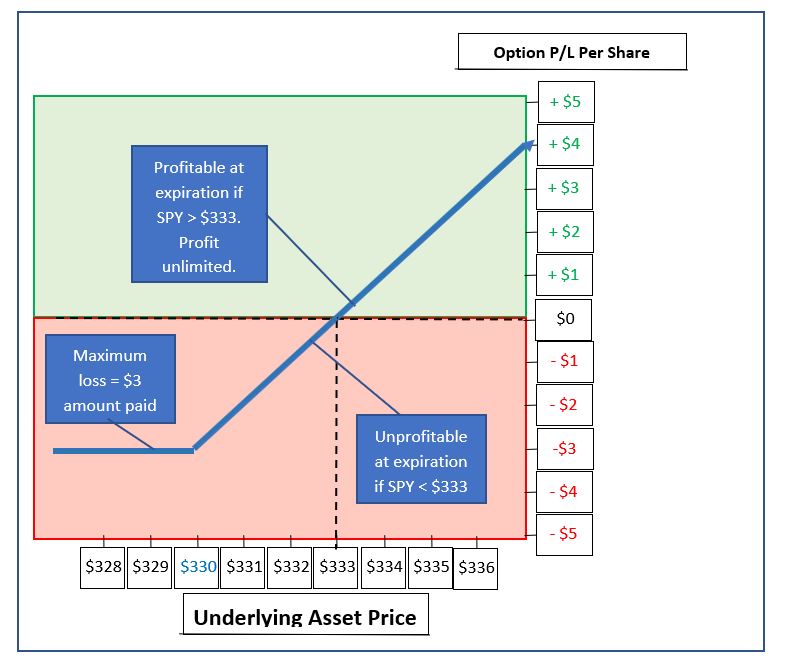

Drawing and Filling Out an Option Profit/Loss Graph

Pinterest

Miscarriage sketch shows the 'pure grief' of loss

Sorry for Your Loss Card Sympathy Card Hand Drawing Etsy UK

Pin on Death and Grief

Drawing and Filling Out an Option Profit/Loss Graph

How to draw the (Los)S thing r/lossedits

35+ Ideas For Deep Pain Sad Drawings Easy Sarah Sidney Blogs

35 Ideas For Deep Pain Sad Drawings Easy

TensorFlow is a widely used open-source library for numerical computation that makes building and training machine-learning models faster and easier.
